Storing data on-premises requires setting up and maintaining large infrastructure. Amazon Web Services (AWS) provides a wide range of cloud-based storage solutions as an alternative. These services let customers store and manage many types of data in the cloud, including structured and unstructured data, files, images, videos, and backups.

AWS provides a variety of storage services to meet different use cases, including object storage, block storage, file storage, and backup storage. Some of the most commonly used AWS storage services include Amazon S3 (Simple Storage Service), Amazon EBS (Elastic Block Store), Amazon EFS (Elastic File System), Amazon Glacier, and AWS Storage Gateway.

These storage services provide highly scalable, durable, secure, and cost-effective storage that can meet a range of needs, from storing files and hosting websites to running databases and archiving data. They also provide features such as high availability, backup and disaster recovery, data encryption, and access controls, making them suitable for use in various industries and use cases.

Amazon S3 (Simple Storage Service)

Amazon S3 (Simple Storage Service) is a highly scalable, durable, and secure object storage service provided by AWS. It allows customers to store and retrieve any amount of data from anywhere on the web, making it suitable for a wide range of use cases, including website hosting, mobile applications, data backup and archival, and big data analytics.

Some key features of Amazon S3 include:

Scalability: Amazon S3 can store an unlimited amount of data, and its infrastructure can scale to accommodate virtually any storage requirement.

Durability: Amazon S3 stores data redundantly across multiple devices and facilities, ensuring that data is highly durable and available.

Security: Amazon S3 provides robust security features, including data encryption, access control, and integration with AWS Identity and Access Management (IAM) to manage user access.

Availability: Amazon S3 Standard is designed for 99.99% availability, and the S3 service level agreement commits to 99.9% monthly uptime, making it a reliable storage solution for critical applications and data.

Cost-effective: Amazon S3 offers a pay-as-you-go pricing model, where customers only pay for the storage they use, with no upfront costs or minimum fees.

Storage Classes in S3

Amazon S3 provides several storage classes that allow customers to choose the most appropriate storage option for their data based on their access patterns and cost requirements. Each storage class has its own performance, availability, and pricing characteristics, allowing customers to optimize their costs and performance based on their specific needs.

Different types of S3 storage classes are as follows:

S3 Standard: This is the default storage class for S3 and is designed for frequently accessed data that requires high performance and low latency. It offers high durability, availability, and throughput and is suitable for storing data that needs to be accessed frequently.

S3 Standard-Infrequent Access (S3 Standard-IA): This storage class is designed for data that is accessed less frequently but still needs rapid access when requested. It offers the same durability and low latency as S3 Standard, with a slightly lower designed availability (99.9%). S3 Standard-IA has lower storage costs than S3 Standard, but it charges a per-GB retrieval fee.

S3 One Zone-Infrequent Access (S3 One Zone-IA): This storage class is similar to S3 Standard-IA but stores data in a single Availability Zone, making it less resilient to outages than S3 Standard-IA. S3 One Zone-IA is suitable for data that can be recreated easily or is non-critical.

S3 Intelligent-Tiering: This storage class monitors access patterns and automatically moves objects between access tiers as those patterns change, for example from a frequent-access tier to a lower-cost infrequent-access tier. It provides the same durability as S3 Standard and lowers costs by keeping each object in the most cost-effective tier.

S3 Glacier: This storage class is designed for long-term data archival and offers lower storage costs than S3 Standard and the Infrequent Access classes. Retrieval times are longer than those of S3 Standard and S3 Standard-IA, and retrieving data incurs additional fees.

S3 Glacier Deep Archive: This is the lowest-cost storage class in S3 and is designed for data that is rarely accessed but needs to be retained for a long time. Retrieval times are longer than those of S3 Glacier, and retrieving data incurs additional fees.
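As a rough illustration of how a storage class is chosen per object, the sketch below builds the keyword arguments for an S3 upload. It assumes the boto3 SDK's `put_object` call, whose `StorageClass` parameter accepts identifiers such as `STANDARD_IA` and `DEEP_ARCHIVE`. The `pick_storage_class` helper and its access-pattern labels are hypothetical names invented for this example, not part of AWS.

```python
def pick_storage_class(access_pattern, recreatable=False):
    """Hypothetical helper: map a coarse access-pattern label to an S3 class ID."""
    if access_pattern == "frequent":
        return "STANDARD"
    if access_pattern == "infrequent":
        # One Zone-IA is only a fit when the data is easy to recreate.
        return "ONEZONE_IA" if recreatable else "STANDARD_IA"
    if access_pattern == "unknown":
        return "INTELLIGENT_TIERING"
    if access_pattern == "archive":
        return "GLACIER"
    if access_pattern == "rarely":
        return "DEEP_ARCHIVE"
    raise ValueError(f"unrecognised access pattern: {access_pattern!r}")


def build_put_object_args(bucket, key, body, access_pattern, recreatable=False):
    """Build the keyword arguments for an upload into the chosen class."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "StorageClass": pick_storage_class(access_pattern, recreatable),
    }


# Example with a made-up bucket and key.
args = build_put_object_args("example-bucket", "reports/2023.csv", b"a,b\n", "infrequent")
```

With boto3 installed and credentials configured, these arguments could be passed along as `boto3.client("s3").put_object(**args)`.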

Versioning and MFA delete on S3

Amazon S3 (Simple Storage Service) provides two key features that enhance data protection and compliance: versioning and MFA (Multi-Factor Authentication) delete.

- Versioning: S3 versioning is a feature that allows customers to keep multiple versions of an object in the same bucket. When versioning is enabled, S3 saves a new version every time the object is overwritten, and a delete request adds a delete marker instead of removing the data. Previous versions are therefore retained and can be accessed or restored until they are permanently deleted.

Versioning provides several benefits, including:

Data protection: Versioning protects against accidental or malicious data deletion, overwriting, or corruption. It ensures that all versions of an object are retained, allowing customers to recover from unintended changes or data loss.

Compliance: Versioning is also useful for compliance with regulations or audit requirements that require data retention or change tracking.

Simplified backup and recovery: Versioning provides a simple and efficient way to back up and restore data, as customers can retrieve previous versions of an object or restore deleted objects.

- MFA delete: MFA delete is a feature that adds an extra layer of security to prevent accidental or malicious object deletion. When MFA delete is enabled, S3 requires a valid MFA code to permanently delete an object version or to change the versioning state of a bucket.

MFA delete provides additional security benefits, including:

- Protection against accidental or unauthorized object deletion: MFA delete prevents unauthorized or accidental object deletion, as it requires an additional factor of authentication to perform these actions.

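To enable versioning on an S3 bucket, customers can use the AWS Management Console, CLI, or SDKs. MFA delete requires versioning to be enabled, and it can only be turned on through the CLI or API by the bucket owner's root account, not through the console. Customers can also set up lifecycle policies to automatically transition objects to lower-cost storage classes or delete them after a specified time.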
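A minimal sketch of what such a request might look like through an SDK is shown below. It assumes boto3's `put_bucket_versioning` call, which takes a `VersioningConfiguration` with `Status` and an optional `MFADelete` field, plus an `MFA` string made of the device serial number and the current code separated by a space. The function name, bucket name, and device ARN are placeholders.

```python
def build_versioning_request(bucket, mfa_serial=None, mfa_code=None):
    """Build keyword arguments that enable versioning, optionally with MFA delete."""
    request = {
        "Bucket": bucket,
        "VersioningConfiguration": {"Status": "Enabled"},
    }
    if mfa_serial is not None:
        if not mfa_code:
            raise ValueError("an MFA code is required when a device serial is given")
        request["VersioningConfiguration"]["MFADelete"] = "Enabled"
        # The API expects "<device serial or ARN> <current code>".
        request["MFA"] = f"{mfa_serial} {mfa_code}"
    return request


# Versioning only (placeholder bucket name).
plain = build_versioning_request("example-bucket")

# Versioning plus MFA delete (placeholder device ARN and code).
with_mfa = build_versioning_request(
    "example-bucket",
    mfa_serial="arn:aws:iam::111122223333:mfa/root-account-mfa-device",
    mfa_code="123456",
)
```

Either dictionary could then be passed as `boto3.client("s3").put_bucket_versioning(**request)`.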

S3 Lifecycle Policy

Amazon S3 lifecycle policies enable you to define rules for automatically transitioning your objects to different storage classes or deleting them after a specified period. This can help you to optimize your storage costs and ensure that your data is stored and managed efficiently over its lifecycle.

A lifecycle policy consists of one or more rules, each of which specifies a condition and an action. The condition determines which objects the rule applies to, based on the object's key name prefix, tags, or size, while the object's age determines when the action runs. The action specifies what should happen to the object, such as transitioning it to a different storage class or deleting it.

For example, if you have data that you need to keep for long-term storage but don't need to access frequently, you could create a rule that transitions objects to the Glacier storage class after a certain period. This can help you reduce your storage costs even further.

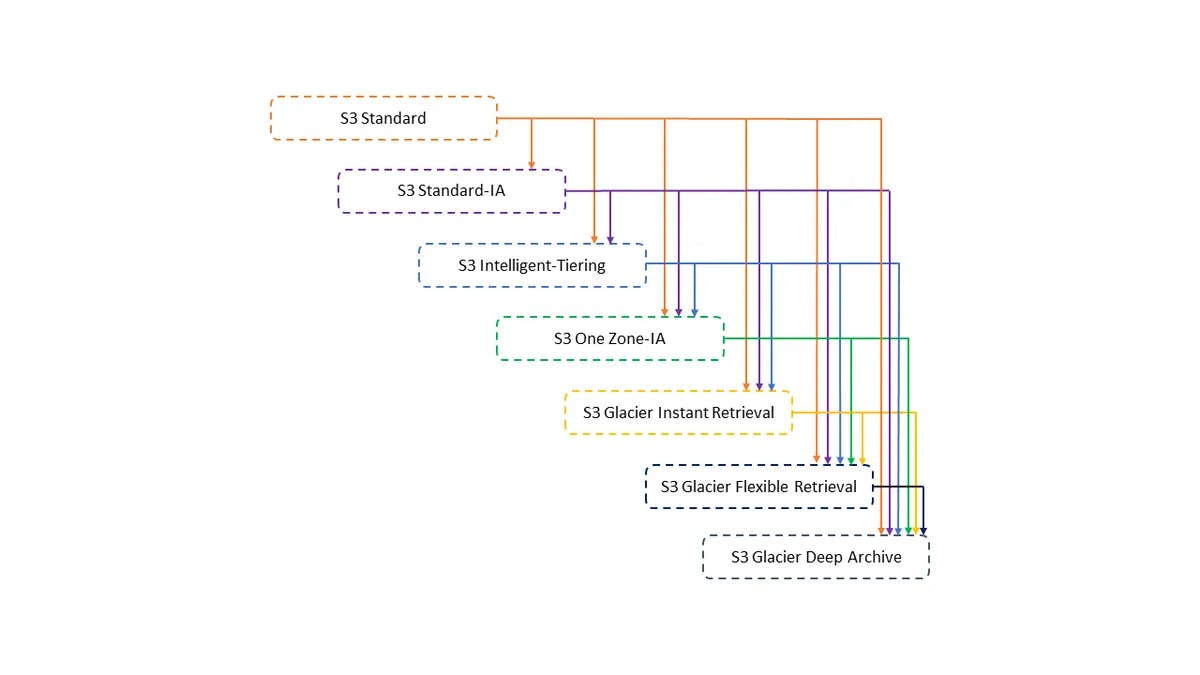

S3 lifecycle transitions follow a waterfall model, as shown in the image above. That is, an object stored in the S3 Standard class can transition to any other class, but the reverse is not true: a lifecycle rule cannot move an object back up the waterfall.
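The one-way ordering can be sketched as a simple position check. The list below covers only the six classes discussed in this post, and the order is an assumption taken from the AWS lifecycle documentation, so it is worth confirming against the current docs before relying on it. `can_transition` is a hypothetical helper name.

```python
# Classes covered in this post, from the top of the waterfall to the bottom.
WATERFALL = [
    "STANDARD",
    "STANDARD_IA",
    "INTELLIGENT_TIERING",
    "ONEZONE_IA",
    "GLACIER",
    "DEEP_ARCHIVE",
]


def can_transition(source, target):
    """Return True if a lifecycle rule may move objects from source to target."""
    # A transition is only allowed to a class strictly further down the list.
    return WATERFALL.index(source) < WATERFALL.index(target)


downhill = can_transition("STANDARD", "DEEP_ARCHIVE")
uphill = can_transition("GLACIER", "STANDARD_IA")
```

Here `downhill` is true and `uphill` is false, which matches the rule that objects only move down the waterfall.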

Lifecycle policies are attached to a bucket, and each rule can target either the whole bucket or a subset of its objects. You can create up to 1,000 rules per bucket. You can manage lifecycle policies using the S3 console, the AWS CLI, or the S3 API.
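To make the rule structure concrete, here is a hedged sketch that assembles a lifecycle configuration in the shape boto3's `put_bucket_lifecycle_configuration` expects (`Rules`, each with `ID`, `Status`, `Filter`, `Transitions`, and an optional `Expiration`). The helper names, the `logs/` prefix, and the day counts are illustrative assumptions, not recommendations.

```python
def build_lifecycle_rule(rule_id, prefix, transitions, expire_after_days=None):
    """Build one rule: which objects it matches and what happens to them."""
    rule = {
        "ID": rule_id,
        "Status": "Enabled",
        # Condition: only objects whose key starts with this prefix.
        "Filter": {"Prefix": prefix},
        # Actions: move to a cheaper class once the object reaches each age.
        "Transitions": [
            {"Days": days, "StorageClass": storage_class}
            for days, storage_class in transitions
        ],
    }
    if expire_after_days is not None:
        rule["Expiration"] = {"Days": expire_after_days}
    return rule


def build_lifecycle_request(bucket, rules):
    """Wrap rules into the keyword arguments for the lifecycle API call."""
    rules = list(rules)
    if len(rules) > 1000:
        raise ValueError("a bucket can hold at most 1,000 lifecycle rules")
    return {"Bucket": bucket, "LifecycleConfiguration": {"Rules": rules}}


# Example: archive logs step by step, then delete them after a year.
log_rule = build_lifecycle_rule(
    "archive-logs",
    "logs/",
    transitions=[(30, "STANDARD_IA"), (90, "GLACIER")],
    expire_after_days=365,
)
request = build_lifecycle_request("example-bucket", [log_rule])
```

The resulting dictionary could be sent with `boto3.client("s3").put_bucket_lifecycle_configuration(**request)`.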

S3 Glacier and S3 Glacier Deep Archive Storage

Amazon S3 Glacier and S3 Glacier Deep Archive are two storage classes offered by Amazon Web Services (AWS) for the long-term archival of data.

Amazon S3 Glacier:

Amazon S3 Glacier is a cloud storage service designed for data archiving and long-term backups, where data is infrequently accessed but needs to be stored securely for a long period.

It's a cost-effective option with low storage costs, but retrieving data incurs additional fees.

S3 Glacier provides three different retrieval options: Expedited, Standard, and Bulk. The expedited retrieval option allows users to access their data within 1-5 minutes, while the standard retrieval option takes 3-5 hours. The bulk retrieval option typically takes 5-12 hours but is the most cost-effective option.

Data stored in S3 Glacier is encrypted both in transit and at rest, and it's designed to provide 99.999999999% (eleven nines) durability.

Amazon S3 Glacier Deep Archive:

S3 Glacier Deep Archive is an S3 storage class that provides the lowest-cost storage option for data that is rarely accessed but needs to be retained for a long time (e.g., 7-10 years).

It's designed for use cases such as regulatory and compliance requirements, long-term data retention, and digital preservation.

The retrieval times for S3 Glacier Deep Archive are longer than those of S3 Glacier: the standard retrieval option completes within 12 hours and the bulk option within 48 hours.

The storage costs for S3 Glacier Deep Archive are the lowest within AWS, but retrieval fees are higher than those of S3 Glacier.
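Archived objects have to be restored before they can be read. The sketch below builds such a restore request under the assumption that it is sent through boto3's `restore_object` call, which takes a `RestoreRequest` with `Days` and `GlacierJobParameters.Tier`. The tier table reflects the options described above (Deep Archive has no expedited option), and the helper name and default values are hypothetical.

```python
# Retrieval tiers accepted by each archive class.
ALLOWED_TIERS = {
    "GLACIER": ("Expedited", "Standard", "Bulk"),
    "DEEP_ARCHIVE": ("Standard", "Bulk"),
}


def build_restore_request(bucket, key, storage_class, tier="Standard", days=7):
    """Build keyword arguments asking S3 for a temporary copy of an archived object."""
    allowed = ALLOWED_TIERS.get(storage_class)
    if allowed is None:
        raise ValueError(f"no restore tiers known for class {storage_class!r}")
    if tier not in allowed:
        raise ValueError(f"{tier!r} retrieval is not available for {storage_class}")
    return {
        "Bucket": bucket,
        "Key": key,
        "RestoreRequest": {
            # How long the restored copy stays readable.
            "Days": days,
            "GlacierJobParameters": {"Tier": tier},
        },
    }


# Cheapest retrieval of a Deep Archive object (placeholder names).
restore = build_restore_request(
    "example-bucket", "audit/2015.tar", "DEEP_ARCHIVE", tier="Bulk", days=3
)
```

The request could be submitted with `boto3.client("s3").restore_object(**restore)`. Choosing `Bulk` trades the longest wait for the lowest retrieval fee.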

EBS and EFS

EBS (Elastic Block Store)

EBS (Elastic Block Store) provides persistent block-level storage volumes for use with EC2 instances. EBS volumes are like virtual hard drives that can be attached to EC2 instances to provide additional storage. EBS volumes are designed for transactional workloads and are suitable for running databases, enterprise applications, and boot volumes for EC2 instances.

Knowing the benefits of EBS gives you a clear idea of its use cases. Benefits of EBS include:

High performance and low latency: EBS volumes provide consistent, low-latency performance for transactional workloads.

Durability and availability: EBS volumes are designed for high durability and availability, with the ability to automatically replicate data within an Availability Zone.

Snapshots and backups: EBS volumes can be easily backed up or restored using snapshots, which are point-in-time copies of the volume.
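As a small sketch of the snapshot step, the code below prepares the arguments for a point-in-time copy of a volume. It assumes the boto3 EC2 client's `create_snapshot` call with its `VolumeId`, `Description`, and `TagSpecifications` parameters. The helper name, the `Purpose` tag, and the volume ID are made up for illustration.

```python
from datetime import datetime, timezone


def build_snapshot_request(volume_id, purpose, now=None):
    """Build keyword arguments for a point-in-time snapshot of an EBS volume."""
    now = now or datetime.now(timezone.utc)
    stamp = now.strftime("%Y-%m-%dT%H:%MZ")
    return {
        "VolumeId": volume_id,
        "Description": f"{purpose} snapshot of {volume_id} taken {stamp}",
        # Tag the snapshot so it can be found and cleaned up later.
        "TagSpecifications": [
            {
                "ResourceType": "snapshot",
                "Tags": [{"Key": "Purpose", "Value": purpose}],
            }
        ],
    }


# Example with a placeholder volume ID and a fixed timestamp.
snapshot_args = build_snapshot_request(
    "vol-0123456789abcdef0",
    "nightly-backup",
    now=datetime(2024, 1, 2, 3, 4, tzinfo=timezone.utc),
)
```

With credentials configured, `boto3.client("ec2").create_snapshot(**snapshot_args)` would start the snapshot.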

EFS (Elastic File System)

EFS is a fully managed NFS (Network File System) file storage service that provides scalable, shared file storage for use with EC2 instances. EFS file systems are designed for workloads that require shared access to files across multiple instances or users, such as web serving, content management, or big data analytics.

Benefits of EFS include:

Scalability and flexibility: EFS can scale up or down automatically to accommodate changing workloads and provides the flexibility to create and manage multiple file systems with different performance characteristics.

Shared access: EFS supports concurrent access to a file system from multiple EC2 instances or users, making it suitable for workloads that require shared file access.

Durability and availability: EFS provides high durability and availability by automatically replicating data across multiple Availability Zones.

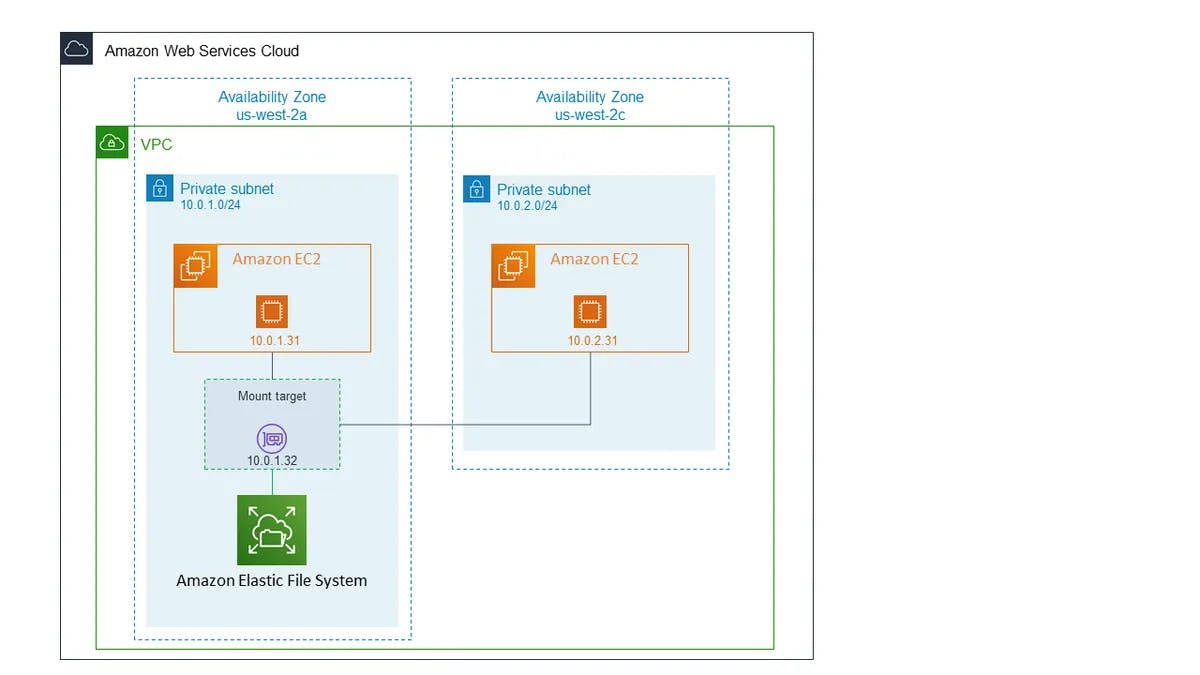

As the image above shows, two EC2 instances are connected to a single EFS file system. If you want to share the same data between multiple instances for some computing purpose, then EFS is the best choice.
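Sharing works because every instance mounts the same file system over NFS. The sketch below assembles the mount command each instance would run. It assumes the standard EFS DNS name format (`<file-system-id>.efs.<region>.amazonaws.com`) and the NFS options commonly listed in the AWS documentation. The file system ID, region, and mount point are placeholders.

```python
# NFS client options commonly recommended for EFS (an assumption; check current docs).
NFS_OPTIONS = "nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport"


def efs_dns_name(file_system_id, region):
    """Return the DNS name of an EFS file system in the given region."""
    return f"{file_system_id}.efs.{region}.amazonaws.com"


def build_mount_command(file_system_id, region, mount_point):
    """Build the argument list that mounts the file system at mount_point."""
    return [
        "sudo", "mount", "-t", "nfs4", "-o", NFS_OPTIONS,
        f"{efs_dns_name(file_system_id, region)}:/",
        mount_point,
    ]


# Both instances would run the same command, so they see the same files.
command = build_mount_command("fs-0123456789abcdef0", "us-east-1", "/mnt/efs")
command_line = " ".join(command)
```

On each instance the list could be handed to `subprocess.run(command, check=True)`, or `command_line` pasted into a shell.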

Amazon FSx

Amazon FSx is a fully managed file storage service offered by AWS. FSx provides scalable, high-performance file storage for Windows and Linux workloads, making it suitable for a variety of applications, including databases, analytics, and machine learning.

FSx offers several file system options, including NetApp ONTAP and OpenZFS. The two covered here are:

Amazon FSx for Windows File Server: FSx for Windows File Server provides file storage for Windows workloads, with support for the SMB (Server Message Block) protocol. This makes it easy to migrate existing Windows file shares to the cloud and provides seamless integration with Windows-based applications.

Amazon FSx for Lustre: FSx for Lustre provides high-performance file storage for Linux workloads, with support for the Lustre file system. This makes it suitable for high-performance computing (HPC) workloads, such as simulations, rendering, and scientific computing.

AWS Storage Gateway

AWS Storage Gateway is a hybrid cloud storage service offered by AWS. It enables customers to seamlessly and securely connect their on-premises IT environment to the AWS cloud storage services.

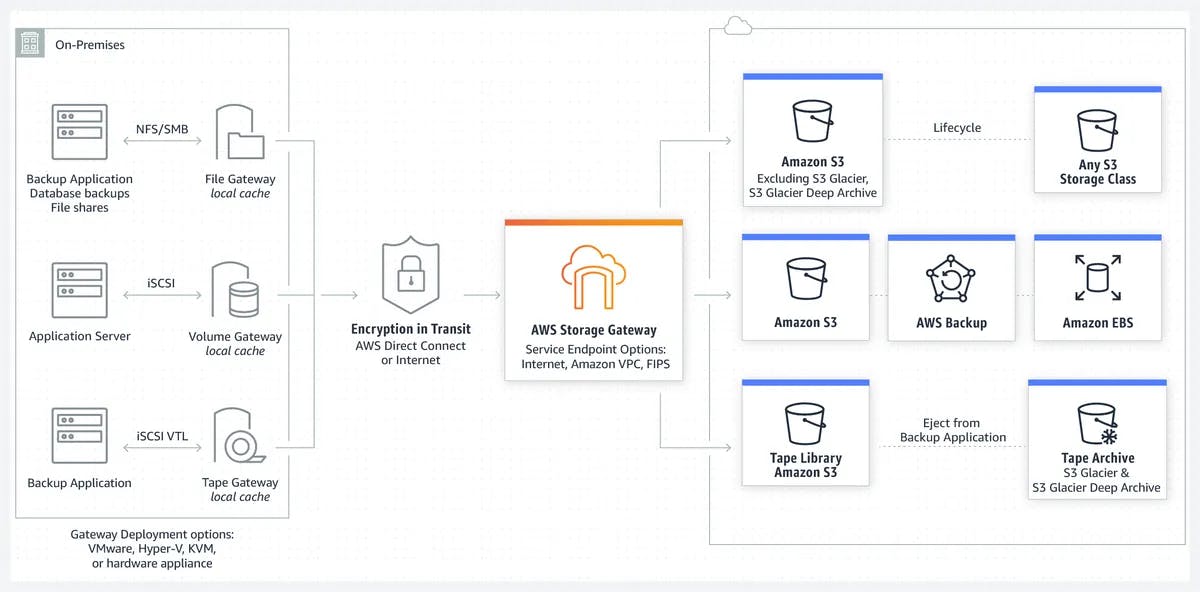

Storage Gateway offers three types of gateway:

File Gateway: File Gateway provides a cloud-backed file share that can be mounted on your on-premises environment as an NFS or SMB file share. This file share is backed by Amazon S3, and supports features such as lifecycle policies, versioning, and cross-region replication. File Gateway also supports features such as caching and local disk storage for improved performance.

Volume Gateway: Volume Gateway provides cloud-backed block storage that can be mounted as an iSCSI device on your on-premises environment. Volume Gateway supports two different modes of operation:

Cached Volumes: In this mode, frequently accessed data is cached on-premises, while infrequently accessed data is stored in Amazon S3.

Stored Volumes: In this mode, all data is stored on-premises, and asynchronously backed up to Amazon S3 for disaster recovery purposes.

Tape Gateway: Tape Gateway provides a virtual tape library (VTL) that enables customers to back up their data to virtual tapes stored in Amazon S3 or Amazon Glacier. Tape Gateway supports industry-standard backup applications and enables customers to use their existing backup workflows.

The image above gives a visual overview of this architecture.

So far we have taken only a brief look at the storage services offered by AWS. The intent of this blog is to familiarize you with the different types of storage services. Make sure to select the right one for your use case. For example, if you want to host a website or store images and videos, then S3 is the right pick. If you want a shared file storage space for your instances, then EFS is the right choice.